0x551 Decoder

1. Encoder-Decoder Model

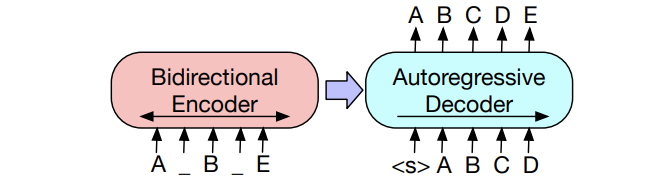

1.1. BART

Model (BART)

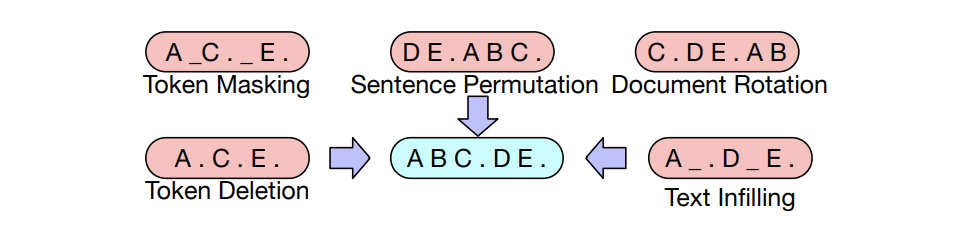

BART is a denoising encoder-decoder model trained by

- corrupting text with noise

- learning a model to reconstruct the original text

BART's noising transformations are as follows

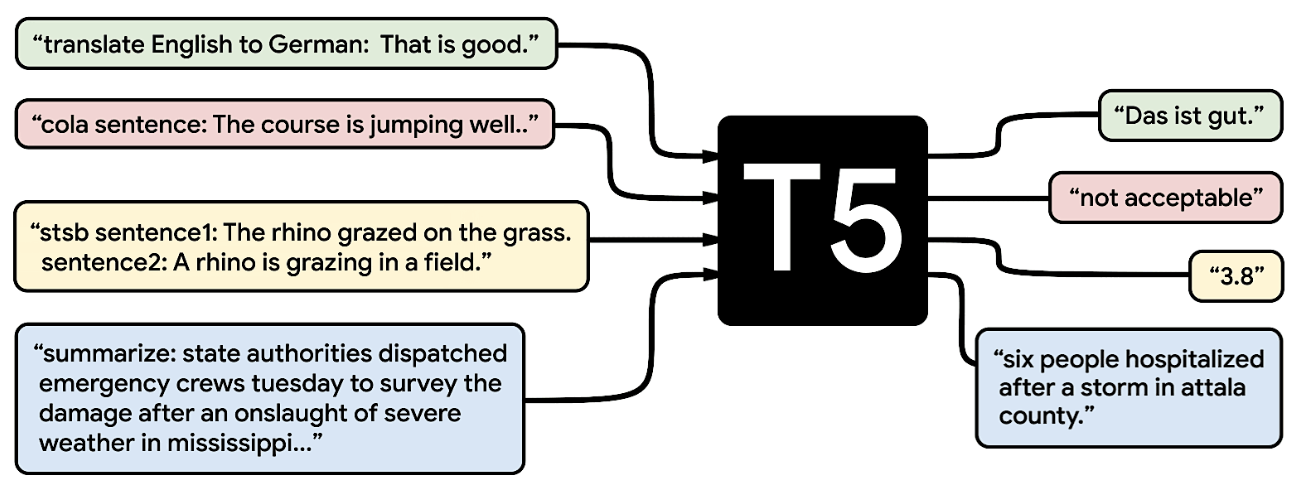
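The corruption step can be sketched in a few lines. This is a minimal illustration, assuming the figure shows the five transformations from the BART paper (token masking, token deletion, text infilling, sentence permutation, document rotation). The function names and the `<mask>` string are made up for this sketch, and the infilling span is passed in explicitly, whereas BART samples span lengths from a Poisson distribution.

```python
import random

MASK = "<mask>"  # placeholder mask symbol (assumption for this sketch)


def token_masking(tokens, rate, rng):
    """Replace each token with MASK independently with probability `rate`."""
    return [MASK if rng.random() < rate else t for t in tokens]


def token_deletion(tokens, rate, rng):
    """Drop each token independently with probability `rate`."""
    return [t for t in tokens if rng.random() >= rate]


def text_infilling(tokens, start, length):
    """Replace the span tokens[start:start+length] with a single MASK.

    The model must also predict how many tokens are missing.
    """
    return tokens[:start] + [MASK] + tokens[start + length:]


def sentence_permutation(sentences, rng):
    """Shuffle the order of sentences in a document."""
    shuffled = sentences[:]
    rng.shuffle(shuffled)
    return shuffled


def document_rotation(tokens, pivot):
    """Rotate the document so that it starts at position `pivot`."""
    return tokens[pivot:] + tokens[:pivot]
```

The training pair is then (corrupted text, original text): the encoder reads the corrupted input and the decoder is trained to emit the original.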

1.2. T5

Model (T5, Text-to-Text Transfer Transformer)

Also check the Blog

2. Decoder Model (Causal Language Model)

2.1. GPT

GPT is a language model built on the transformer decoder. See Mu Li's video

Model (GPT) 0.1B

Check the next section for details

Model (GPT2) 1.5B

Model (GPT3) 175B

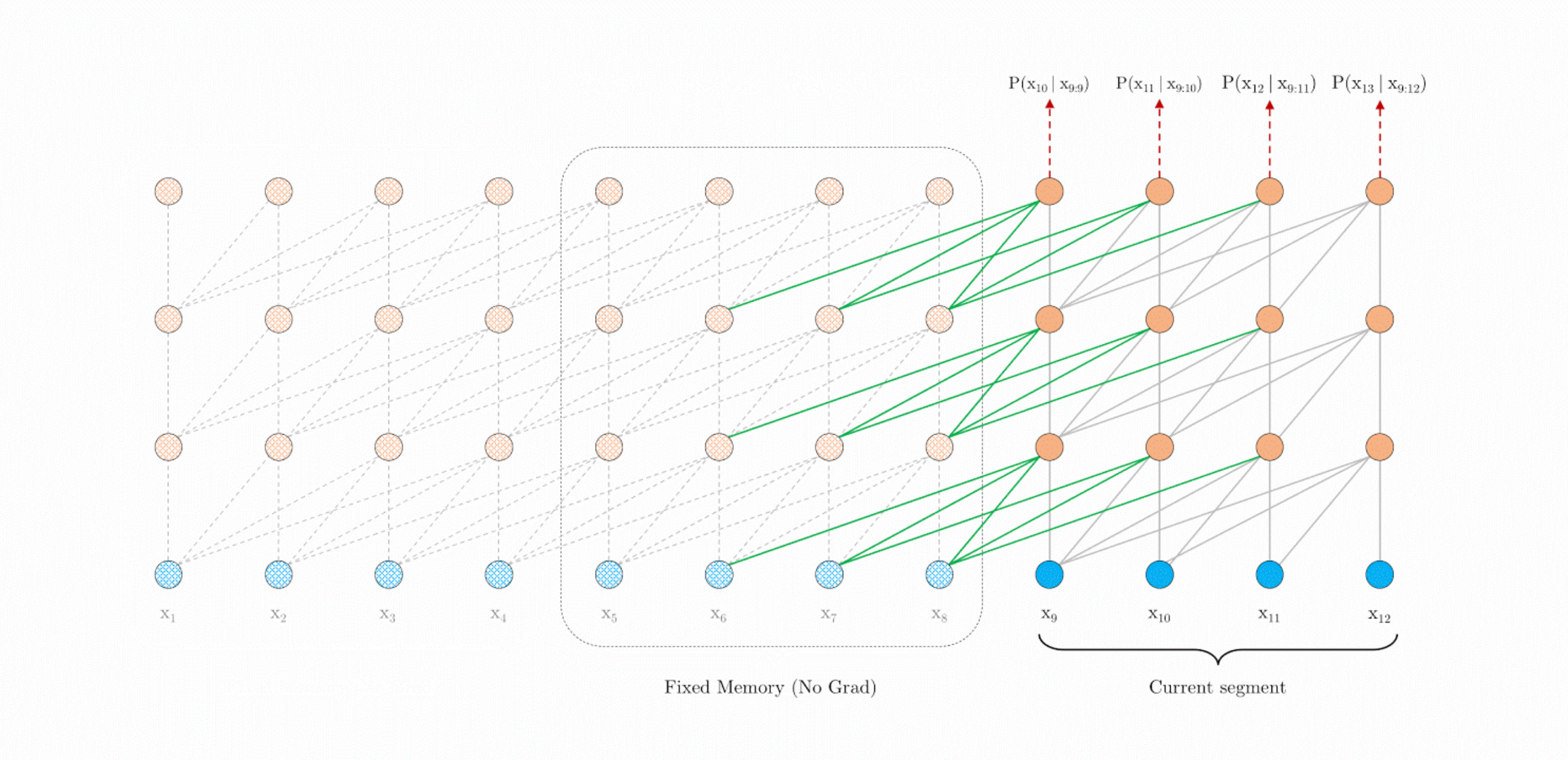

2.2. Transformer XL

Model (Transformer XL) overcomes the fixed-length context limitation by

- segment-level recurrence: the hidden states of the previous segment are cached and provided to the next segment as extra context

- relative positional encoding: uses fixed embeddings with learnable transformations
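A toy sketch of segment-level recurrence, under heavy simplifications: hidden states are plain numbers, there is one layer, and `causal_mean_layer` is a made-up stand-in for attention that averages everything a position may look at. It only illustrates how the cached states extend the context beyond the current segment. Relative positional encoding is not modeled here.

```python
def causal_mean_layer(segment, memory):
    """Toy stand-in for an attention layer.

    Each position outputs the mean of what it may attend to: the cached
    memory plus the current segment up to and including itself.
    """
    out = []
    for i in range(len(segment)):
        context = memory + segment[: i + 1]
        out.append(sum(context) / len(context))
    return out


def run_with_recurrence(states, seg_len, mem_len):
    """Process `states` segment by segment, reusing cached states."""
    memory = []   # cached states from earlier segments
    outputs = []
    for start in range(0, len(states), seg_len):
        segment = states[start:start + seg_len]
        outputs.extend(causal_mean_layer(segment, memory))
        # Cache the layer inputs for the next segment, keeping the last
        # mem_len entries. In the real model the cache is treated as a
        # constant (no gradient flows through it).
        memory = (memory + segment)[-mem_len:] if mem_len > 0 else []
    return outputs
```

With `mem_len=0` each segment is processed in isolation, which is the fixed-length context setting that the recurrence is meant to fix.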

See this blog

2.3. XLNet

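Model (XLNet) a permutation language model: it is trained autoregressively over sampled factorization orders of the sequence, so each token can learn from context on both sides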

2.4. Distributed Models

Model (LaMDA) a decoder-only dialog model

- pretrained on next-word prediction

- fine-tuned on examples in a "context sentinel response" format

See this Blog

Model (PaLM, Pathways Language Model)

See this blog

3. Decoding

Naive ways to generate text are

- greedy search: pick the highest-probability token at each timestep

- beam search: keep the top-k most likely hypotheses at each timestep

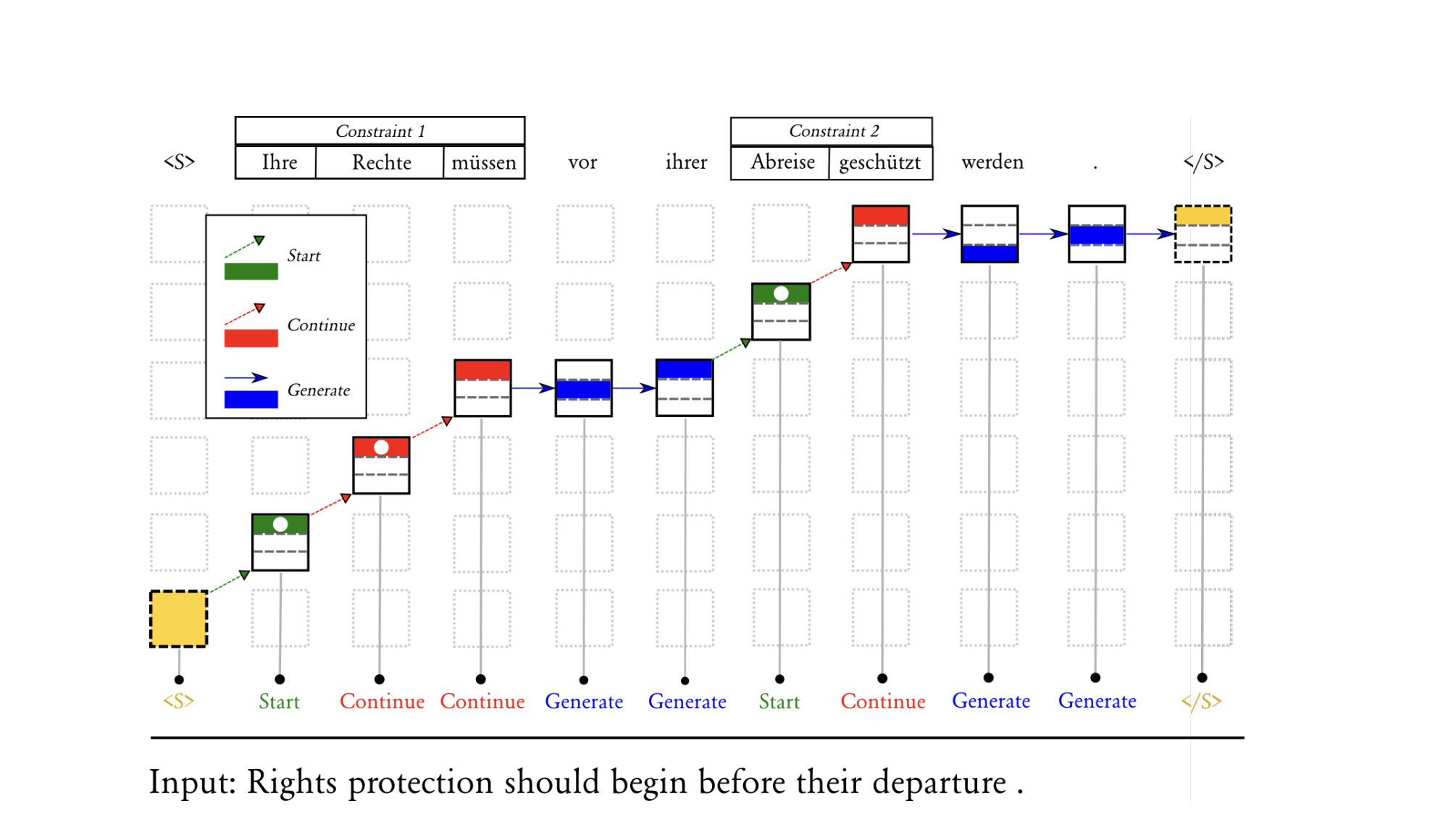
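Both strategies can be sketched against a toy model. Here `next_probs` is a hypothetical callable that maps a prefix (a tuple of tokens) to a dict of next-token probabilities, and `toy_next_probs` is a hand-built example chosen so that greedy search misses the most likely sequence.

```python
import math


def greedy_search(next_probs, start, steps):
    """Pick the single most likely token at every timestep."""
    seq = [start]
    for _ in range(steps):
        probs = next_probs(tuple(seq))
        seq.append(max(probs, key=probs.get))
    return seq


def beam_search(next_probs, start, steps, k):
    """Keep the k highest-scoring hypotheses at every timestep."""
    beams = [(0.0, [start])]  # (log-probability, sequence)
    for _ in range(steps):
        candidates = []
        for logp, seq in beams:
            for tok, p in next_probs(tuple(seq)).items():
                if p > 0:
                    candidates.append((logp + math.log(p), seq + [tok]))
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:k]
    return beams[0][1]


def toy_next_probs(prefix):
    """Hand-built distribution: 'a' looks best first, but 'b x' wins overall."""
    table = {
        ("<s>",): {"a": 0.6, "b": 0.4},
        ("<s>", "a"): {"x": 0.5, "y": 0.5},
        ("<s>", "b"): {"x": 0.9, "y": 0.1},
    }
    return table[prefix]
```

On this toy model greedy search commits to `a` (sequence probability 0.3), while beam search with `k=2` keeps `b` alive and finds `b x` (probability 0.36). With `k=1` beam search reduces to greedy search.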

Model (grid beam search, lexical constraints) extends beam search to support lexical constraints by adding a new axis to the beam, which tracks how much of the constraints has been covered so far

See this Hugging Face blog

Model (nucleus sampling, top-p sampling) top-p sampling builds the candidate set as the smallest set of tokens \(V^{(p)}\) whose cumulative probability crosses a threshold \(p\):

\[ \sum_{x \in V^{(p)}} P(x \mid x_{1:i-1}) \geq p \]

The probability mass is then redistributed within this set by renormalizing. Unlike top-k sampling with a fixed k, the size of the set adapts to the shape of the distribution.
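A minimal sketch of top-p sampling over a single next-token distribution, assuming the distribution is given as a dict from token to probability. The names `top_p_filter` and `top_p_sample` are illustrative and not taken from any library.

```python
import random


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability
    reaches p, then renormalize within that set."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, total = [], 0.0
    for tok, prob in ranked:
        nucleus.append((tok, prob))
        total += prob
        if total >= p:
            break
    return {tok: prob / total for tok, prob in nucleus}


def top_p_sample(probs, p, rng):
    """Sample one token from the renormalized nucleus."""
    filtered = top_p_filter(probs, p)
    tokens = list(filtered)
    weights = [filtered[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]
```

For a flat distribution the nucleus contains many tokens, and for a peaked one it can shrink to a single token, which is the adaptivity that a fixed k lacks.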

Speculative Decoding (Leviathan et al., 2023) [1] samples a short draft sequence from a small model \(q(x)\), then uses the target (large) model \(p(x)\) to verify the draft and decide at which timestep to cut it off
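One round of the draft-then-verify loop can be sketched as follows. This is a simplified illustration of the accept/reject rule from Leviathan et al. (2023), not their implementation: `p_model` and `q_model` are hypothetical callables mapping a prefix tuple to a dict of next-token probabilities, and the verification loop is sequential here, whereas a real system scores all draft positions with the large model in one parallel pass.

```python
import random


def sample(dist, rng):
    """Draw one token from a dict of (possibly unnormalized) weights."""
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


def speculative_step(p_model, q_model, prefix, gamma, rng):
    """Draft `gamma` tokens with q, verify with p, return the new tokens."""
    # 1. Draft gamma tokens autoregressively from the small model q.
    draft, q_dists = [], []
    ctx = list(prefix)
    for _ in range(gamma):
        q_dist = q_model(tuple(ctx))
        tok = sample(q_dist, rng)
        draft.append(tok)
        q_dists.append(q_dist)
        ctx.append(tok)

    # 2. Verify each drafted token against the large model p.
    accepted = []
    ctx = list(prefix)
    for tok, q_dist in zip(draft, q_dists):
        p_dist = p_model(tuple(ctx))
        # Accept with probability min(1, p(tok) / q(tok)).
        if rng.random() < min(1.0, p_dist.get(tok, 0.0) / q_dist[tok]):
            accepted.append(tok)
            ctx.append(tok)
            continue
        # Rejected: cut the draft here and resample from max(0, p - q).
        residual = {t: max(0.0, p_dist[t] - q_dist.get(t, 0.0)) for t in p_dist}
        accepted.append(sample(residual, rng))
        return accepted

    # 3. Every draft token was accepted: take one extra token from p.
    accepted.append(sample(p_model(tuple(ctx)), rng))
    return accepted
```

Each round yields between 1 and `gamma + 1` tokens, and the accept/resample rule keeps the output distributed as if it had been sampled from the large model alone.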

Model (Jacobi decoding) iteratively decodes the entire sequence in parallel until it converges to a fixed point. This can be enhanced by reusing n-grams collected along the Jacobi trajectory, as in lookahead decoding
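A small sketch of the fixed-point view, assuming greedy decoding and a hypothetical callable `greedy_next(prefix)` that returns the argmax next token for a prefix. Each iteration recomputes all positions from the previous guess. A real model would do this in one batched forward pass, while the list comprehension below does it one position at a time.

```python
def jacobi_decode(greedy_next, prompt, n, pad=0):
    """Decode n tokens by fixed-point iteration instead of left to right."""
    guess = [pad] * n      # arbitrary initial guess for all n positions
    iterations = 0
    for _ in range(n):     # after k iterations the first k tokens are final
        iterations += 1
        # Update every position from the PREVIOUS iterate (Jacobi-style).
        new = [greedy_next(tuple(prompt) + tuple(guess[:i])) for i in range(n)]
        if new == guess:   # fixed point: identical to greedy decoding
            break
        guess = new
    return guess, iterations
```

In the worst case this takes as many iterations as sequential decoding. The gain comes when several positions become correct in the same iteration, so the loop stops early.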

4. Calibration

Model (confidence calibration) the probability associated with the predicted class label should reflect its ground-truth correctness likelihood

Suppose the neural network is \(h(X) = (\hat{Y}, \hat{P})\), where \(\hat{Y}\) is the prediction and \(\hat{P}\) is the associated confidence. Perfect calibration should satisfy

\[ P(\hat{Y} = Y \mid \hat{P} = p) = p, \quad \forall p \in [0, 1] \]

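One measure of calibration is ECE (Expected Calibration Error), defined as the expected absolute difference between confidence and actual accuracy

\[ \mathrm{ECE} = \mathbb{E}_{\hat{P}} \left[ \left| P(\hat{Y} = Y \mid \hat{P} = p) - p \right| \right] \]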
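In practice ECE is usually estimated by binning predictions by confidence and comparing the accuracy with the mean confidence in each bin. The sketch below assumes equal-width bins and uses half-open intervals, with a confidence of exactly 1.0 placed in the last bin. The function name and the default of 10 bins are choices made for this example.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE estimate: sum over bins of (|B| / n) * |acc(B) - conf(B)|."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 -> last bin
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / n * abs(accuracy - avg_conf)
    return ece
```

A model that answers with confidence 0.9 and is right 9 times out of 10 gets an ECE near 0, while one that is always fully confident but right half the time gets 0.5.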

Analysis (larger models are well-calibrated) larger models are well calibrated when the task is presented in the right format

[1] Yaniv Leviathan, Matan Kalman, and Yossi Matias. 2023. Fast inference from transformers via speculative decoding. In International Conference on Machine Learning, pages 19274–19286. PMLR.