0x531 Sequence

Using a standard network (e.g. an MLP that maps the concatenated inputs to the concatenated outputs) to model a sequence works poorly, for several reasons:

- input and output lengths can vary across samples

- features learned at one sequence position are not shared with other positions

- too many parameters, since the input layer grows with the maximum sequence length

1. RNN

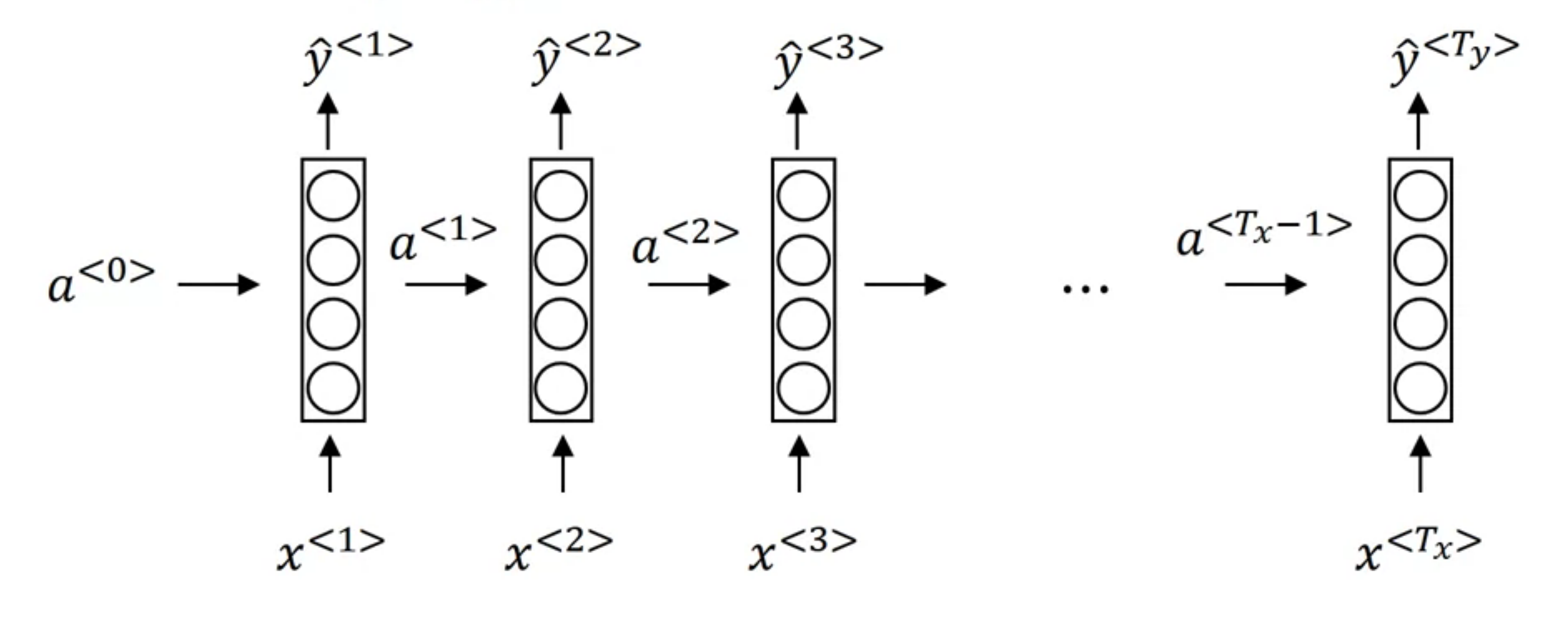

The vanilla RNN has the architecture shown above (figure from the deeplearning.ai courses).

The formulas are
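In the course's usual notation (assumed here, with \(a^{\langle t \rangle}\) the hidden activation, \(x^{\langle t \rangle}\) the input, and \(g_1\), \(g_2\) the activation functions), the forward pass can be written as:

\[
\begin{aligned}
a^{\langle t \rangle} &= g_1\left(W_{aa}\,a^{\langle t-1 \rangle} + W_{ax}\,x^{\langle t \rangle} + b_a\right) \\
\hat{y}^{\langle t \rangle} &= g_2\left(W_{ya}\,a^{\langle t \rangle} + b_y\right)
\end{aligned}
\]

The same weights \(W_{aa}\), \(W_{ax}\), \(W_{ya}\) are reused at every time step, which is what shares features across positions and keeps the parameter count independent of the sequence length.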

1.1. Bidirectional RNN

The unidirectional vanilla RNN has a limitation. Consider this named-entity recognition (NER) example from the deeplearning.ai course:

- He said Teddy Roosevelt was a great president

- He said Teddy bears are on sale

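At the word Teddy, it is hard to decide whether it is part of a person's name without looking at the words that follow. A bidirectional RNN addresses this by running a second RNN from right to left and combining both hidden states at each position.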
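As a minimal sketch of this idea (a scalar tanh RNN with made-up weights and numeric stand-ins for the words, all hypothetical), the forward state at "Teddy" is identical for both sentences, while a backward pass makes the two cases distinguishable:

```python
import math

def rnn_pass(xs, w_x, w_h, b):
    """Run a scalar tanh RNN over xs and return the hidden state at every step."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

def bidirectional_states(xs, fwd_params, bwd_params):
    """Pair each position's left-to-right state with its right-to-left state."""
    forward = rnn_pass(xs, *fwd_params)
    # Run over the reversed sequence, then flip back so index t lines up again.
    backward = rnn_pass(xs[::-1], *bwd_params)[::-1]
    return list(zip(forward, backward))

# Hypothetical numeric stand-ins: the sequences share a prefix up to "Teddy"
# (index 2) and differ only in the word that follows it.
teddy_person = [0.1, 0.2, 0.9, 0.5]
teddy_bear = [0.1, 0.2, 0.9, -0.5]
params = (1.0, 0.5, 0.0)  # (w_x, w_h, b), arbitrary illustrative values

person_states = bidirectional_states(teddy_person, params, params)
bear_states = bidirectional_states(teddy_bear, params, params)
```

At index 2 the forward components of `person_states` and `bear_states` agree, and only the backward components differ.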

1.2. Gradient Vanishing/Explosion

RNNs suffer from vanishing and exploding gradients when the recurrent weights are badly conditioned. This paper gives conditions under which each issue occurs:

Suppose we have the recurrent structure
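One form consistent with the symbols used in the rest of this section is the following; the input weights \(W_{in}\), the input \(x_t\) and the bias \(b\) are assumed names:

\[
h_t = W_{rec}\,\sigma(h_{t-1}) + W_{in}\,x_t + b
\]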

Let the loss at step \(t\) be \(E_t = \mathcal{L}(h_t)\) and the total loss be \(E = \sum_t E_t\); then
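Written out with the chain rule (a sketch, where \(\theta\) stands for the parameters and \(\partial^+ h_k / \partial \theta\) is assumed to denote the immediate derivative that treats \(h_{k-1}\) as constant):

\[
\frac{\partial E}{\partial \theta} = \sum_t \frac{\partial E_t}{\partial \theta},
\qquad
\frac{\partial E_t}{\partial \theta} = \sum_{k=1}^{t} \frac{\partial E_t}{\partial h_t}\,\frac{\partial h_t}{\partial h_k}\,\frac{\partial^+ h_k}{\partial \theta}
\]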

The partial derivative between hidden states, \(\partial h_t / \partial h_k\), can be further decomposed into
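Assuming a recurrence of the form \(h_t = W_{rec}\,\sigma(h_{t-1}) + W_{in}\,x_t + b\), the decomposition is a product of one-step Jacobians, multiplied in order from \(i = t\) down to \(i = k+1\) (some texts write \(W_{rec}^\top\) instead, depending on the layout convention):

\[
\frac{\partial h_t}{\partial h_k} = \prod_{i=k+1}^{t} \frac{\partial h_i}{\partial h_{i-1}} = \prod_{i=k+1}^{t} W_{rec}\,\mathrm{diag}\left(\sigma'(h_{i-1})\right)
\]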

Suppose the derivative of the nonlinearity, \(\sigma'\), is bounded by \(\gamma\), so that \(\| \mathrm{diag}(\sigma'(h_t)) \| \leq \gamma\) (e.g. \(\gamma = 1/4\) for the sigmoid function).

It is sufficient for the largest eigenvalue \(\lambda_1\) (in absolute value) of \(W_{rec}\) to be less than \(1/\gamma\) for the vanishing gradient problem to occur, because
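A sketch of that bound, assuming each one-step Jacobian has the form \(W_{rec}\,\mathrm{diag}(\sigma'(h_{i-1}))\) and reading \(\lambda_1\) as the spectral norm \(\|W_{rec}\|\) (the largest singular value), which is what the norm inequality uses:

\[
\left\| \frac{\partial h_i}{\partial h_{i-1}} \right\| \leq \|W_{rec}\|\,\left\| \mathrm{diag}\left(\sigma'(h_{i-1})\right) \right\| < \frac{1}{\gamma}\,\gamma = 1
\]

With \(\eta = \|W_{rec}\|\,\gamma < 1\), every factor is bounded by \(\eta\), so

\[
\left\| \frac{\partial h_t}{\partial h_k} \right\| \leq \eta^{\,t-k}
\]

which shrinks to zero exponentially as \(t - k\) grows.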

Similarly, by inverting the statement, a necessary condition for exploding gradients is \(\lambda_1 > 1/\gamma\).
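A scalar toy case makes the vanishing condition concrete. This is a minimal sketch, assuming the recurrence \(h_t = w_{rec}\,\sigma(h_{t-1})\) with a sigmoid (so \(\gamma = 1/4\)); the function name and the values are hypothetical. Each one-step derivative is at most \(w_{rec}/4\) in magnitude, so any \(|w_{rec}| < 4\) makes the product shrink geometrically:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def jacobian_product(w_rec, h0, steps):
    """Return |dh_T / dh_0| for the scalar recurrence h_t = w_rec * sigmoid(h_{t-1})."""
    h, prod = h0, 1.0
    for _ in range(steps):
        s = sigmoid(h)
        # One-step derivative: dh_t/dh_{t-1} = w_rec * sigmoid'(h_{t-1}),
        # where sigmoid'(z) = s * (1 - s) <= 1/4.
        prod *= w_rec * s * (1.0 - s)
        h = w_rec * s
    return abs(prod)

# Illustrative values only: w_rec = 3.0 satisfies |w_rec| < 1 / gamma = 4.
grad_10 = jacobian_product(w_rec=3.0, h0=0.5, steps=10)
grad_50 = jacobian_product(w_rec=3.0, h0=0.5, steps=50)
```

With `w_rec = 3.0` every factor is at most 0.75, so `grad_50` is bounded by \(0.75^{50}\) and is much smaller than `grad_10`.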

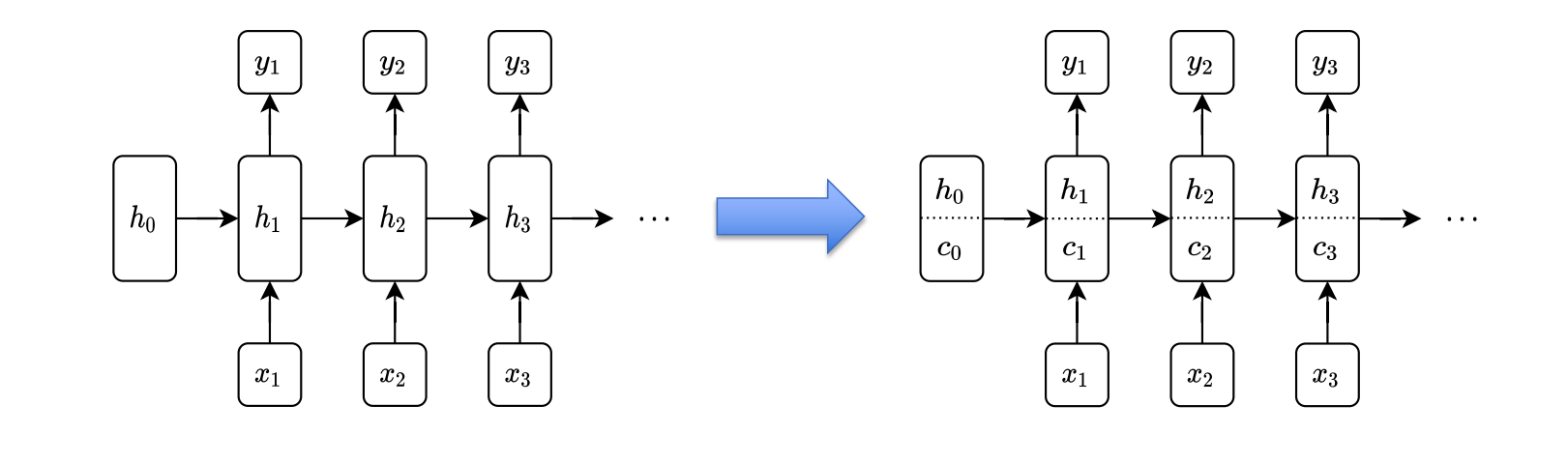

1.3. LSTM

Model (LSTM) The LSTM avoids some of the problems of vanilla RNNs.

It computes the forget, input, and output gates.
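In one common formulation (assumed here; \([h_{t-1}, x_t]\) denotes concatenation and the weight names are illustrative), the gates are:

\[
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right)
\end{aligned}
\]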

The following is the key formula, where the saturating gate \(f_t \in [0,1]\) passes the gradient from \(c_t\) back to \(c_{t-1}\)
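In the standard LSTM (assumed here), with input gate \(i_t\), output gate \(o_t\) and candidate cell state \(\tilde{c}_t\), the cell update reads:

\[
\begin{aligned}
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
\]

Along the direct path the Jacobian is \(\partial c_t / \partial c_{t-1} = \mathrm{diag}(f_t)\), so a forget gate close to 1 carries the gradient back almost unchanged, without repeated multiplication by a weight matrix.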

2. Positional Encoding

2.1. Classical Encoding

See this blog for some intuition

Model (sinusoidal positional encoding) The original Transformer uses the sinusoidal positional encoding
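Written with the base frequency \(\omega_0\) (position \(t\) and pair index \(i = 0, \dots, d_{model}/2 - 1\) are assumed notation), the encoding is:

\[
e(t)_{2i} = \sin\left(\omega_0^{2i/d_{model}}\,t\right),
\qquad
e(t)_{2i+1} = \cos\left(\omega_0^{2i/d_{model}}\,t\right)
\]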

where \(\omega_0 = 1/10000\). This resembles the binary representation of the position integer:

- the least significant bit alternates fastest (the sinusoidal embedding has its highest frequency, \(\omega_0^{2i/d_{model}} = 1\), at \(i = 0\))

- higher bits alternate more slowly (higher dimensions \(i\) have lower frequencies, approaching \(1/10000\))
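The frequency pattern can be checked numerically. This is a minimal sketch, assuming interleaved sin/cos pairs and an even `d_model`; the function name is hypothetical:

```python
import math

def sinusoidal_encoding(t, d_model, omega_0=1.0 / 10000):
    """Return e(t) as interleaved (sin, cos) pairs with frequency omega_0 ** (2i / d_model)."""
    enc = []
    for i in range(d_model // 2):
        freq = omega_0 ** (2 * i / d_model)
        enc.extend([math.sin(freq * t), math.cos(freq * t)])
    return enc

# Frequencies of the four (sin, cos) pairs for d_model = 8:
# the first is exactly 1, and each later pair is slower than the one before.
frequencies = [(1.0 / 10000) ** (2 * i / 8) for i in range(4)]
e0 = sinusoidal_encoding(0, 8)
```

Note that for a finite `d_model` the slowest pair has frequency \(\omega_0^{(d_{model}-2)/d_{model}}\), which only approaches \(1/10000\) as `d_model` grows.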

Another characteristic is that relative positions are easy to express: for any offset \(k\) there exists a linear transformation (a rotation matrix) that depends only on \(k\) and maps \(e(t)\) to \(e(t+k)\).
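The rotation follows from the angle-addition identities applied to each (sin, cos) pair. A minimal sketch, assuming the interleaved layout \(e(t)_{2i} = \sin(\omega_i t)\), \(e(t)_{2i+1} = \cos(\omega_i t)\) with \(\omega_i = \omega_0^{2i/d_{model}}\); `shift_encoding` is a hypothetical helper:

```python
import math

def shift_encoding(enc, k, omega_0=1.0 / 10000):
    """Map e(t) to e(t + k) by rotating each (sin, cos) pair; t itself is never needed."""
    d_model = len(enc)
    out = []
    for i in range(d_model // 2):
        angle = omega_0 ** (2 * i / d_model) * k
        s, c = enc[2 * i], enc[2 * i + 1]
        # sin(a + b) = sin(a)cos(b) + cos(a)sin(b)
        out.append(s * math.cos(angle) + c * math.sin(angle))
        # cos(a + b) = cos(a)cos(b) - sin(a)sin(b)
        out.append(-s * math.sin(angle) + c * math.cos(angle))
    return out

# e(3) and e(8) for d_model = 4, built directly from the definition.
freqs = [(1.0 / 10000) ** (2 * i / 4) for i in range(2)]
e3 = [f(w * 3) for w in freqs for f in (math.sin, math.cos)]
e8 = [f(w * 8) for w in freqs for f in (math.sin, math.cos)]
shifted = shift_encoding(e3, 5)  # should match e8 up to rounding
```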

The dot product between two sinusoidal encodings is symmetric in the offset and tends to decay as the offset grows.
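To see why, write \(\omega_i = \omega_0^{2i/d_{model}}\) (assumed notation) and expand the dot product pair by pair:

\[
e(t)^\top e(t+k) = \sum_{i} \left[ \sin(\omega_i t)\sin(\omega_i (t+k)) + \cos(\omega_i t)\cos(\omega_i (t+k)) \right] = \sum_{i} \cos(\omega_i k)
\]

The result depends only on the offset \(k\), is even in \(k\), and is largest at \(k = 0\), where it equals \(d_{model}/2\). The decay is a trend rather than strictly monotonic, since the sum of cosines oscillates.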

The original PE is deterministic; however, there are several learnable alternatives for the positional encoding.

Model (absolute positional encoding) learns a vector \(p_i \in \mathbb{R}^d\) for each position \(i\) and uses \(w_i + p_i\) as input, where \(w_i\) is the token embedding. In self-attention, the energy is computed as
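Assuming standard scaled dot-product attention with query and key projections \(W^Q\) and \(W^K\) (names assumed here), the energy between positions \(i\) and \(j\) is:

\[
e_{ij} = \frac{\left((w_i + p_i)\,W^Q\right)\left((w_j + p_j)\,W^K\right)^\top}{\sqrt{d}}
\]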

Model (relative positional encoding) learns a relative encoding \(a_{j-i}\) for each offset \(j - i\), separately in every self-attention layer
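One common formulation (assumed here, with layer input \(x_i\) and projections \(W^Q\), \(W^K\) as illustrative names) adds the relative encoding to the key before taking the dot product:

\[
e_{ij} = \frac{\left(x_i\,W^Q\right)\left(x_j\,W^K + a_{j-i}\right)^\top}{\sqrt{d}}
\]

so the energy depends on the content of both tokens and on their offset, not on their absolute positions.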

2.2. RoPE

RoPE positions can be interpolated to extend a model to longer context lengths (e.g. with limited fine-tuning).
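A minimal sketch of the idea, assuming the usual RoPE formulation (rotate each consecutive pair of features by an angle of position times `base ** (-2i / d)`) and linear position interpolation (scale positions by the ratio of trained to target length). The function names, vectors and lengths are hypothetical:

```python
import math

def rope(x, position, base=10000.0, scale=1.0):
    """Rotate consecutive pairs of x by angles proportional to scale * position."""
    d = len(x)
    out = []
    for i in range(d // 2):
        angle = scale * position * base ** (-2 * i / d)
        a, b = x[2 * i], x[2 * i + 1]
        out.append(a * math.cos(angle) - b * math.sin(angle))
        out.append(a * math.sin(angle) + b * math.cos(angle))
    return out

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

q, k = [1.0, 0.5, -0.3, 0.8], [0.2, -0.1, 0.7, 0.4]

# The attention score depends only on the offset between positions (3 here).
score_near = dot(rope(q, 5), rope(k, 2))
score_far = dot(rope(q, 13), rope(k, 10))

# Position interpolation: squeeze positions of a longer context back into
# the range seen during training.
trained_len, target_len = 2048, 8192
scale = trained_len / target_len
interpolated = rope(q, 8000, scale=scale)  # same rotation as position 2000
```

With `scale = 0.25`, position 8000 in the extended context is rotated exactly like position 2000, which lies inside the trained range.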